
How to Do A/B Testing and Why You Should

February 15, 2023

If you’re in marketing, chances are you’ve heard about A/B testing. As for whether you’ve actually had time to put it into practice, that’s a whole other story.

For small business owners or marketers who are trying to do everything themselves, it can be tough to add yet another task to the ever-revolving list of marketing must-dos. But A/B testing can do a lot to help your email marketing succeed, and it's often the easiest way to find out what will and won't work for you.

If you’re new to A/B testing — or if you’re just not confident you have the time to put it into practice — we’re here to help. Keep reading to learn about the basics of A/B testing, including why it’s important and how to implement it in your email marketing strategy.

What is A/B Testing?

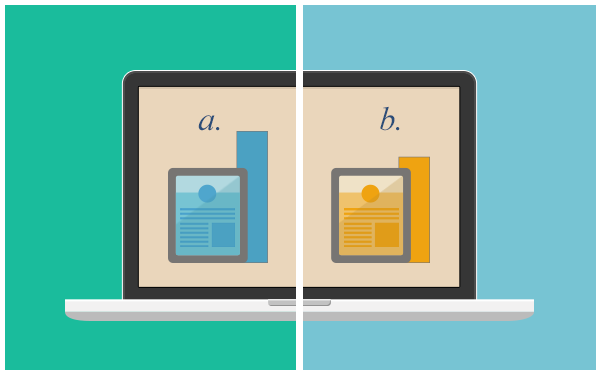

A/B testing, also known as split testing, is a randomized marketing experiment that compares the outcomes of two versions of something: version A and version B, each shown to a randomly chosen part of your audience.

This kind of two-sample hypothesis testing is widely used by marketers, especially in email marketing. It lets you test different features and formats to see which performs better with your audience, from subject lines and body copy to image and CTA placement.
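The "randomized" part matters: every recipient should have the same chance of landing in either group, so that the only systematic difference between A and B is the element you changed. Most email platforms handle this split for you, but the idea fits in a few lines. This is a minimal Python sketch, assuming your list is simply a list of email addresses; the function name `split_ab` and the example addresses are hypothetical.

```python
import random

def split_ab(recipients, seed=None):
    """Randomly assign each recipient to group A or group B (roughly 50/50).

    `split_ab` is a hypothetical helper for illustration, not a platform API.
    """
    rng = random.Random(seed)      # a seed makes the split reproducible
    shuffled = list(recipients)    # copy so the caller's list is untouched
    rng.shuffle(shuffled)          # random order removes any list-order bias
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]

# Hypothetical list of ten subscribers
recipients = [f"user{i}@example.com" for i in range(10)]
group_a, group_b = split_ab(recipients, seed=42)
print(len(group_a), len(group_b))  # 5 5
```

Shuffling before splitting avoids the subtle bias you would get from, say, sending version A to the first half of a list sorted by sign-up date.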

A/B Test Vs. Split Test

We often get asked the question: is there a difference between an A/B test and a split test?

The answer depends on which marketing professional you ask! The two terms have long been used interchangeably, but “split test” tends to be used more often when the difference between the versions is larger.

In that usage, an A/B test shows recipients essentially the same email with minor changes. Versions A and B are identical except for one element, such as the CTA or the button size.

Split testing, on the other hand, sometimes refers to testing an entire redesign of the content. In a split test, recipients might receive two entirely different emails, such as an old design and a new one.

Whether you run an A/B test or a split test, the goal is to measure each version's conversion rate. The version with the higher conversion rate is considered the winner.
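Because an A/B test is a two-sample hypothesis test, "higher" should really mean "higher by more than random noise would explain." One common way to check this for conversion rates is a two-proportion z-test. The sketch below is a minimal Python version using only the standard library; the function name and the 5,000-recipient figures are made up for illustration, it assumes samples large enough for the normal approximation, and the 0.05 cutoff is a widespread convention rather than a rule.

```python
import math

def two_proportion_z_test(conversions_a, sent_a, conversions_b, sent_b):
    """Two-sided two-proportion z-test comparing the conversion rates of A and B.

    Hypothetical helper for illustration; assumes reasonably large samples.
    """
    rate_a = conversions_a / sent_a
    rate_b = conversions_b / sent_b
    # Pooled rate under the null hypothesis that A and B convert equally well
    pooled = (conversions_a + conversions_b) / (sent_a + sent_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal: 2 * (1 - Phi(|z|))
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return rate_a, rate_b, z, p_value

# Made-up example: 5,000 recipients per version
rate_a, rate_b, z, p_value = two_proportion_z_test(200, 5000, 260, 5000)
print(f"A: {rate_a:.1%}  B: {rate_b:.1%}  z = {z:.2f}  p = {p_value:.4f}")
if p_value < 0.05:
    winner = "B" if rate_b > rate_a else "A"
    print(f"Version {winner} wins; the gap is unlikely to be chance alone.")
else:
    print("No clear winner yet; the gap could be random noise.")
```

With these invented numbers (4.0% vs. 5.2%), the p-value comes out well under 0.05. With a much smaller list, the same two rates could easily fail the test, which is why sample size matters.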

Why is A/B Testing so Important?

The benefits of A/B testing come from the insights it gives you. Without it, you're pretty much shooting in the dark, guessing at what your audience will engage with instead of making design and copy decisions based on real-world analytics.

Some of the most direct advantages that you can gain from split testing include:

- Better user engagement. Something as small as the size and color of your CTA button can have a big impact on email engagement. A/B testing lets you see which option gets the most clicks, so you can focus on the features your audience responds to best.

- Better conversion rates. Speaking of engagement, the more of your audience you can get to engage, the more of them you can get to convert. With A/B testing, you can home in on the content and design features that lead to more conversions and drop those that don't.

- Better use of analytics. Looking at your data is one thing; understanding what it means is another. Without A/B testing, you're not digging deep enough into what matters. Because a split test isolates the variable you're testing, you don't have to wonder as much about why your email marketing is (or isn't) performing.

Different Types of A/B Testing in Marketing

A/B testing can be applied to many different marketing channels. Let's look at the marketing elements you could be A/B testing in each.

A/B Testing and Online Ads

A/B testing isn’t only for email marketing. It also helps you learn what works and what doesn’t work with your target audience when running online ads. To A/B test your online ads, you’ll need to create two different versions, send traffic to both versions, and measure the results to see which one performed better.

Your split test should also be tied to a metric that matches your goal for the ad. If you want people to click on your ad, for example, measure which version gets the higher click-through rate.

Elements of an online ad that you can A/B test include:

- Main headline

- Your offer

- Ad image

- Targeting

- Ad format

- Link preview text

- CTA

- Ad media (image or video)

When setting up your ad A/B test:

- Drive enough traffic to the ads. Without enough traffic, it will be hard to get useful results from your test.

- Test one change at a time. If you change too many things at once, you won't be able to tell which change produced the results.

- Be patient. Ads take time to perform, and tests can take a while to run. Ending a test early because you're eager for an answer can skew the results.
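How much traffic is "enough" can be estimated before the test starts. A standard approximation for comparing two rates (such as click-through rates) gives the number of impressions or visitors needed in each variant. The Python sketch below is illustrative only: the function name is hypothetical, and the 5% two-sided significance level and 80% power are common defaults, not values from this article. Many ad and testing tools offer their own calculators.

```python
import math
from statistics import NormalDist

def sample_size_per_variant(baseline_rate, expected_rate, alpha=0.05, power=0.8):
    """Approximate impressions needed in EACH variant to detect a change in rate.

    Hypothetical helper; uses the normal-approximation formula for two proportions.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    p_bar = (baseline_rate + expected_rate) / 2
    spread = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
              + z_beta * math.sqrt(baseline_rate * (1 - baseline_rate)
                                   + expected_rate * (1 - expected_rate))) ** 2
    return math.ceil(spread / (baseline_rate - expected_rate) ** 2)

# Hypothetical: current CTR is 2%, and you hope the new ad lifts it to 2.5%
print(sample_size_per_variant(0.02, 0.025))
```

For this made-up case, the result is roughly 13,800 impressions per variant. Smaller expected differences need much more traffic, which is one reason tests on low-traffic ads take so long to produce a clear answer.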

A/B Testing and Your Website

Conducting A/B tests on your website is crucial for optimizing your conversion rates. The goal of conducting regular A/B tests is to identify which website version is more effective at getting people to take the desired action, whether that’s signing up for your email list or making a purchase.

By constantly testing and tweaking your web pages and forms, you can identify what is working and what isn’t, then use that knowledge to gradually increase your conversion rate over time and get more leads and sales from your website.

There are several elements on your website that you can A/B test to see how well they perform. These include:

- Headlines and subheadlines

- Landing pages

- CTA text

- CTA location

- CTA typeface

- Button placements

- Button sizes

- Layout and design

- Pop-ups

- Featured images

- Sales copy

- The number of fields in a form

- Form offer

- Website navigation

- Social proof

When conducting A/B tests on your website, you should:

- Test one element at a time. Because a website has so many elements, testing them one after another can take a long time. To speed things up, you can use multivariate testing, which tests variations of several elements at once.

- Define the goal the A/B test should serve, such as more email sign-ups or more purchases.

- Allow enough time for the test to generate valuable data.

- Keep track of test results and take action based on your results.
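A multivariate test typically turns every combination of the element variations into its own variant, so the number of variants multiplies quickly and each one receives a smaller share of your traffic. The short Python sketch below shows that growth; the element names and copy are hypothetical examples, and real testing tools may offer other designs that test fewer combinations.

```python
from itertools import product

# Hypothetical variations for three page elements
headlines = ["Save time on email", "Grow your list faster"]
cta_texts = ["Start free trial", "Get started"]
button_colors = ["green", "orange"]

# Every combination of the variations becomes one variant (a full-factorial design)
variants = [
    {"headline": h, "cta_text": c, "button_color": b}
    for h, c, b in product(headlines, cta_texts, button_colors)
]

traffic_share = 1 / len(variants)
print(len(variants))            # 8
print(f"{traffic_share:.1%}")   # 12.5%
```

Two options for each of three elements already produce eight variants, each seeing about one-eighth of visitors, so multivariate tests usually need more traffic than a simple A/B test to reach a clear result.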

A/B Testing and Social Media Posts

Social media is an effective channel for generating leads and driving sales. However, to get the most from each social post, you need to optimize its text, images, and CTA.

- Post text: Elements you can A/B test include length, tone of voice, emoji, punctuation, and, for posts that link to numbered lists, the use of numbers. What works best for you will depend on your social followers and fans.

- Associated images: You’ve heard before that posts with images perform better than plain text posts. But not every type of image delivers higher engagement. Different target audiences will interact differently with various images. Perform A/B tests for regular images vs. animated GIFs, photos of people vs. product images, and infographics vs. graphics.

- Day and time of sharing: As with email, the day and time you share a post on social media are critical to the success of your campaigns. A/B test different posting days and times on each social platform to find the best time to share.

- CTA: The CTA is where you ask readers to engage and take the desired action. Through A/B testing, you can find the text and placement that get the most engagement and conversions.

A/B Testing and Email Marketing

A/B testing your emails is a great way to improve your email marketing campaigns and ensure they’re effective and conversion-focused. When you A/B test your emails, you discover the most effective versions of your emails and understand your audience better by seeing how they respond to different versions of your marketing message.

This, in turn, saves you time and money by helping you focus on the most effective email marketing campaigns. You can also improve your email metrics by using results from testing to create campaigns that boost your email open rates, click-through rates, and, ultimately, your bottom line.

Below are various elements that you can A/B test in your emails.

- Structure

- Email subject line

- Preview text

- Images

- Sender/From name

- Offers

- Length

- CTA

- Segmenting options

- Email design and layout

- Email copy/content

Before A/B testing elements of your emails, you should:

- Develop a hypothesis for the A/B test you want to conduct and what you hope the results will be when you change an element.

- Prioritize the testing ideas that are likely to get you the results you seek with minimum effort.

- Build on the results to improve your email marketing campaigns. Remember, not every test will lead to higher conversions, but each one will likely teach you something, even if it's only what not to repeat.

How Email Marketing Software Can Help

The fastest and easiest way to do A/B testing is to use email marketing automation software. Not only does it make it easier to divide your audience into groups and create multiple versions of the same email, it's also a one-stop shop for gathering data on your results.

As for separate A/B testing tools, as long as your email marketing platform includes A/B testing, you won't need to invest in another piece of software. That's good news for your budget, as well as for your timeline.

If you have the existing software but aren’t sure how to get started with A/B testing, contact a customer service rep who can guide you through the process. Or, reach out to us directly, and we’d be happy to chat with you about how our email marketing software can help you test your emails and send effective campaigns to your subscribers.